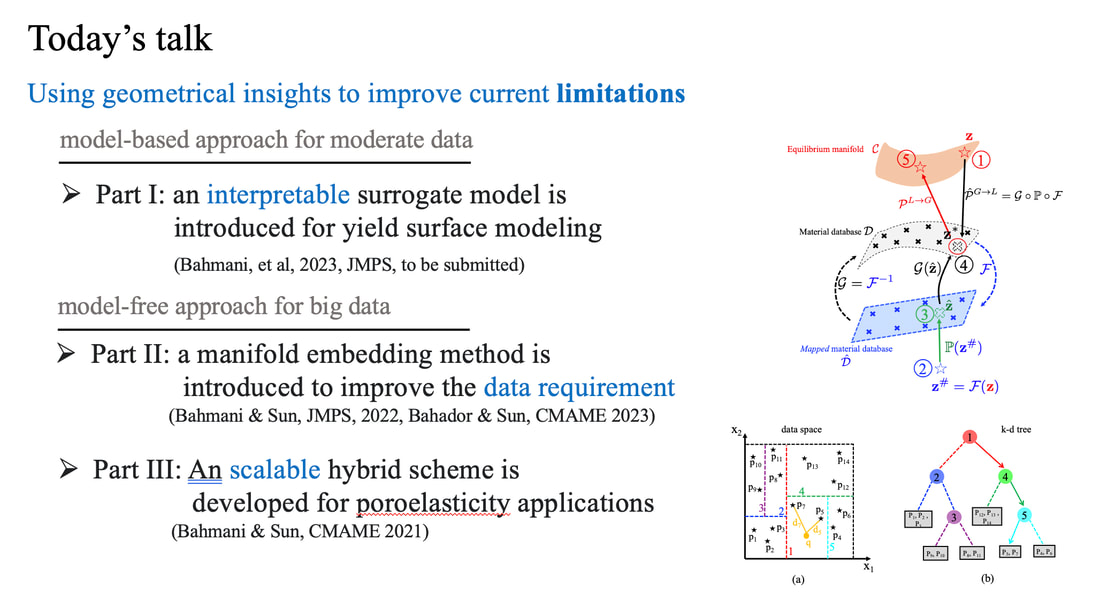

PhD student Bahador Bahmani passed the qualification exam and won the Dongju Lee '03 Memorial Award

5/26/2023

Our PhD student Bahador Bahmani has passed the qualification exam at Columbia University. His proposed dissertation, "Manifold-embedding data-driven mechanics", focuses on applying manifold learning to enable robust model-free simulations. He has published six journal articles, including two papers in CMAME and one in JMPS on the topic of data-driven simulations.

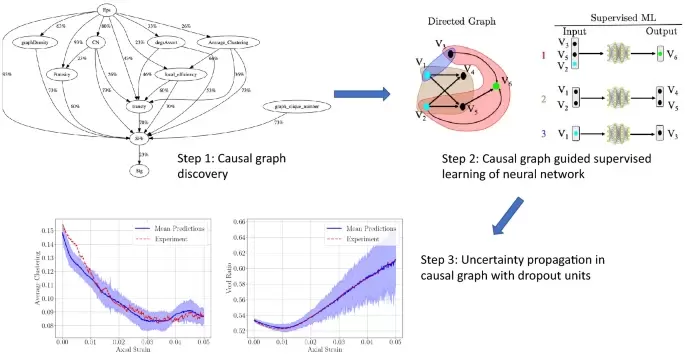

In addition, he has contributed to our collaborative NSF and MURI projects and published papers on causal discovery of granular physics, equivariant geometric learning for digital rock physics, and generative adversarial networks for generating microstructures (see below).

The Dongju Lee Memorial Award is given in recognition of Bahador's achievement and in honor of his integrity, curiosity and creativity. The Dongju Lee Memorial Award and Memorial Lecture were established with a generous contribution from the Lee Family. Congratulations, Bahador, on your outstanding achievement!

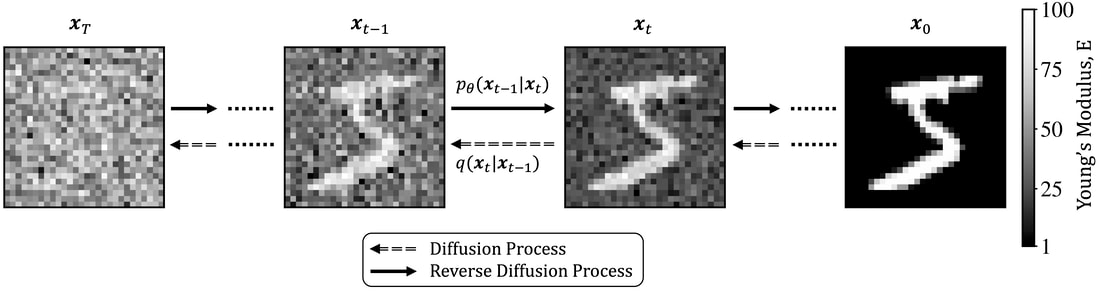

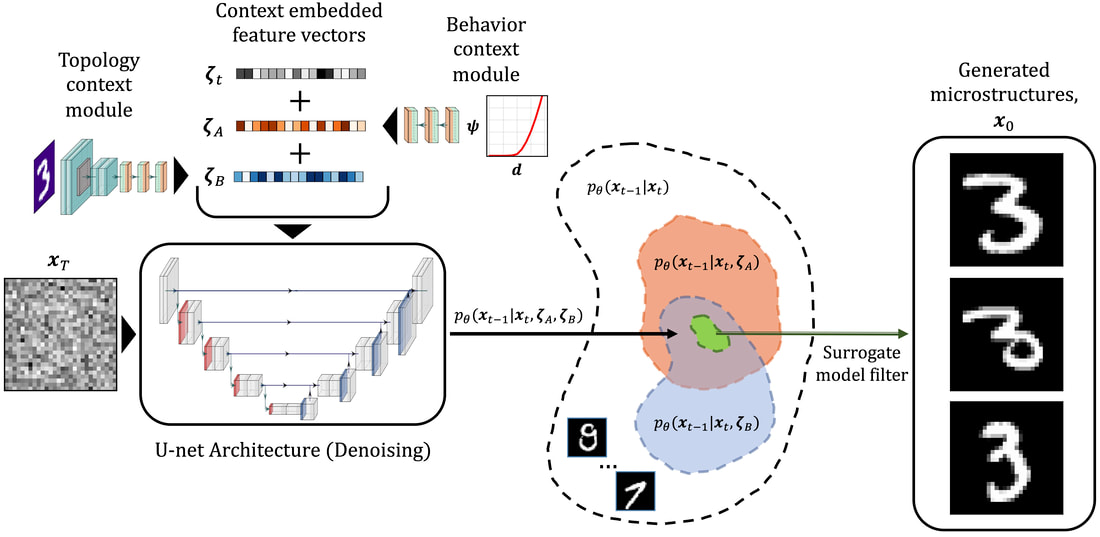

The paper preprint is available at [URL]

In this paper, we (first author = Nick Vlassis) introduce a denoising diffusion algorithm to discover microstructures with nonlinear fine-tuned properties. Denoising diffusion probabilistic models are generative models that use diffusion-based dynamics to gradually denoise images and generate realistic synthetic samples. By learning the reverse of a Markov diffusion process, we design an artificial intelligence that efficiently manipulates the topology of microstructures to generate a massive number of prototypes whose constitutive responses are sufficiently close to designated nonlinear constitutive responses.

While unconditional diffusion can readily generate microstructures consistent with the training data set, our goal is to design microstructures that exhibit prescribed mechanical behaviors. To achieve this goal, we use a conditional diffusion process that fine-tunes the resultant microstructures via feature vectors. To identify the subset of microstructures with sufficiently precise fine-tuned properties, a convolutional neural network surrogate is trained to replace high-fidelity finite element simulations and filter out prototypes outside the admissible range.

Results of this study indicate that the denoising diffusion process is capable of creating microstructures with fine-tuned nonlinear material properties within the latent space of the training data. More importantly, this denoising diffusion algorithm can be easily extended to incorporate additional topological and geometric modifications by introducing high-dimensional structures embedded in the latent space. Numerical experiments are conducted with the open-source Mechanical MNIST data set created by Prof. Lejeune's research group (see below). Consequently, this algorithm is not only capable of performing inverse design of nonlinear effective media, but also learns the nonlinear structure-property map to quantitatively understand the multi-scale interplay among the geometry, topology, and effective macroscopic properties.
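For readers new to diffusion models, here is a minimal sketch of the forward (noising) Markov process whose reverse a denoising diffusion model learns. It is not the code from the paper: the linear noise schedule, the number of steps, and the four-value "image" are assumptions chosen for illustration, and the function names are hypothetical.

```python
import math
import random

def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Noise variances beta_1..beta_T, linearly spaced (an assumed schedule)."""
    if num_steps == 1:
        return [beta_start]
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]

def cumulative_alphas(betas):
    """alpha_bar_t = product over s <= t of (1 - beta_s)."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def forward_noise(x0, t, alpha_bars, rng):
    """Sample x_t from q(x_t | x_0) = N(sqrt(alpha_bar_t) x_0, (1 - alpha_bar_t) I).

    Returns the noisy sample and the Gaussian noise that was mixed in; a
    denoising network would be trained to predict that noise from x_t and t.
    """
    a = alpha_bars[t]
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(a) * x + math.sqrt(1.0 - a) * e for x, e in zip(x0, noise)]
    return xt, noise

betas = linear_beta_schedule(1000)
alpha_bars = cumulative_alphas(betas)
rng = random.Random(0)
pixels = [0.0, 0.5, 1.0, 0.5]  # toy stand-in for a flattened microstructure image
slightly_noisy, eps_early = forward_noise(pixels, 10, alpha_bars, rng)
almost_pure_noise, eps_late = forward_noise(pixels, 999, alpha_bars, rng)
```

At the last step almost no signal from the original image remains, which is why generation can start from pure Gaussian noise and apply the learned reverse steps. Conditioning on feature vectors and the surrogate-based filtering described above are not shown here.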

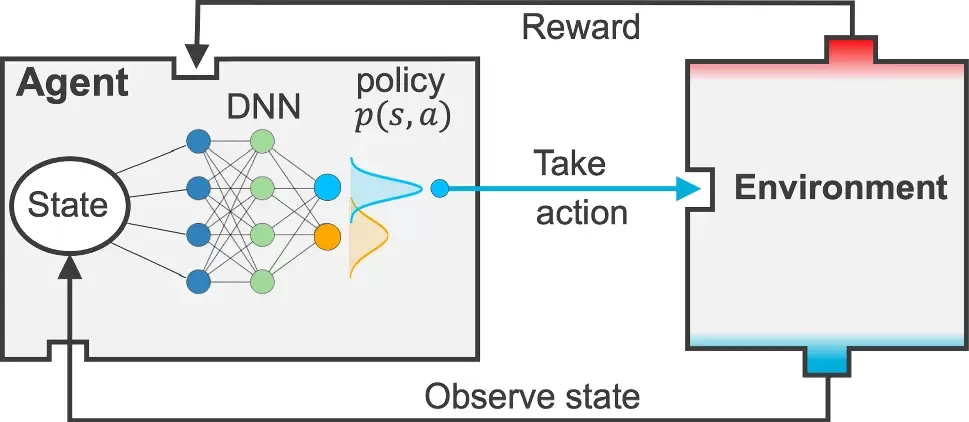

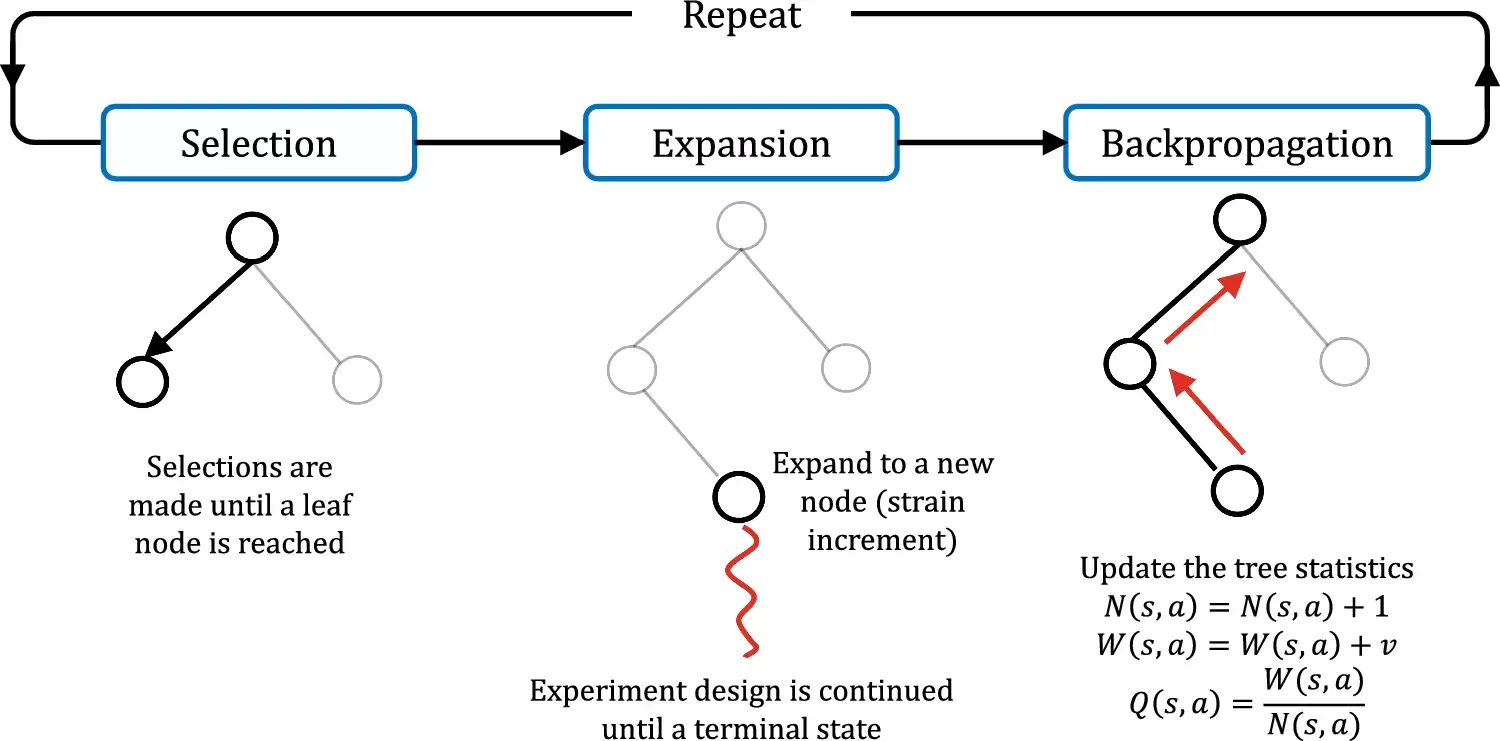

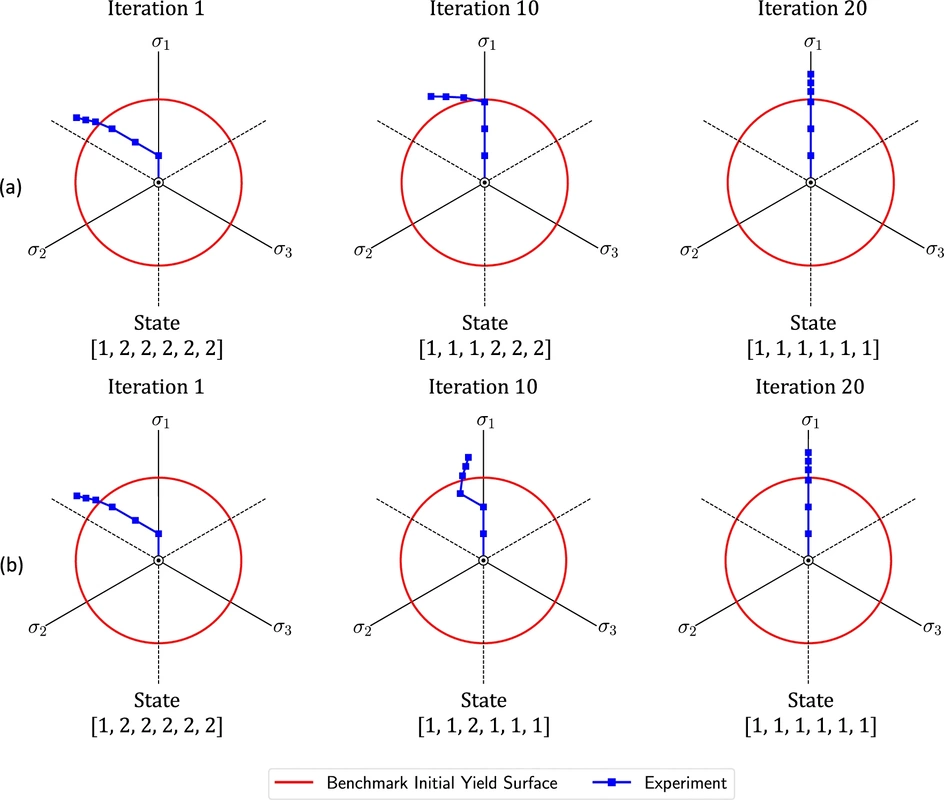

Our collaborative work with Sandia, funded by the LDRD project led by Dr. Sharlotte Kramer, has just been published in the special issue "Machine Learning Theories, Modeling, and Applications to Computational Materials Science, Additive Manufacturing, Mechanics of Materials, Design and Optimization". The article is available on the Computational Mechanics website [URL].

Experimental data are often costly to obtain, which makes it difficult to calibrate complex models. For many models, an experimental design that produces the best calibration given a limited experimental budget is not obvious. This paper introduces a deep reinforcement learning (RL) algorithm for design of experiments that maximizes the information gain, measured by the Kullback–Leibler divergence obtained via the Kalman filter (KF); see figure below. This combination enables experimental design for rapid online experiments where manual trial-and-error is not feasible in the high-dimensional parametric design space.

We formulate possible configurations of experiments as a decision tree and a Markov decision process, where a finite choice of actions is available at each incremental step. Once an action is taken, a variety of measurements are used to update the state of the experiment. These new data lead to a Bayesian update of the parameters by the KF, which is used to enhance the state representation. In contrast to the Nash–Sutcliffe efficiency index, which requires additional sampling to test hypotheses for forward predictions, the KF can lower the cost of experiments by directly estimating the values of new data acquired through additional actions. In this work, our applications focus on mechanical testing of materials. Numerical experiments with complex, history-dependent models are used to verify the implementation and benchmark the performance of the RL-designed experiments.
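To make the information-gain idea concrete, here is a minimal scalar sketch: a Kalman update of one uncertain parameter, followed by the Kullback–Leibler divergence between the posterior and the prior. It is an illustration under simplifying assumptions, not the method from the paper. The linear measurement model, the candidate experiments, and the greedy choice of the best one are all hypothetical stand-ins; the paper uses deep RL over a sequence of actions rather than a one-step greedy pick.

```python
import math

def kalman_update(mean, var, y, h, noise_var):
    """Scalar Kalman update for the assumed measurement model y = h * theta + noise."""
    gain = var * h / (h * h * var + noise_var)
    new_mean = mean + gain * (y - h * mean)
    new_var = (1.0 - gain * h) * var
    return new_mean, new_var

def kl_gaussian(mean_q, var_q, mean_p, var_p):
    """KL(q || p) for two univariate Gaussians."""
    return 0.5 * (var_q / var_p + (mean_q - mean_p) ** 2 / var_p
                  - 1.0 + math.log(var_p / var_q))

def information_gain(mean, var, y, h, noise_var):
    """KL divergence from the prior to the Kalman posterior after observing y."""
    post_mean, post_var = kalman_update(mean, var, y, h, noise_var)
    return kl_gaussian(post_mean, post_var, mean, var)

# Hypothetical candidate experiments, each with a different sensitivity h
# of the measurement to the parameter being calibrated.
candidates = {"uninformative": 0.0, "weak": 0.5, "strong": 2.0}
true_theta = 1.0  # assumed ground truth used to simulate noise-free measurements
gains = {
    name: information_gain(0.0, 1.0, h * true_theta, h, 0.1)
    for name, h in candidates.items()
}
best = max(gains, key=gains.get)  # greedy pick, standing in for the RL policy
```

An experiment whose measurement does not depend on the parameter leaves the posterior equal to the prior, so its gain is zero; more sensitive experiments shrink the posterior variance and score higher.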

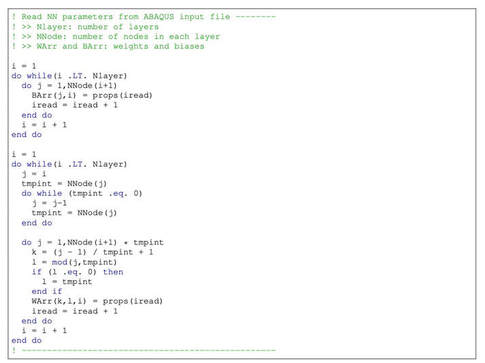

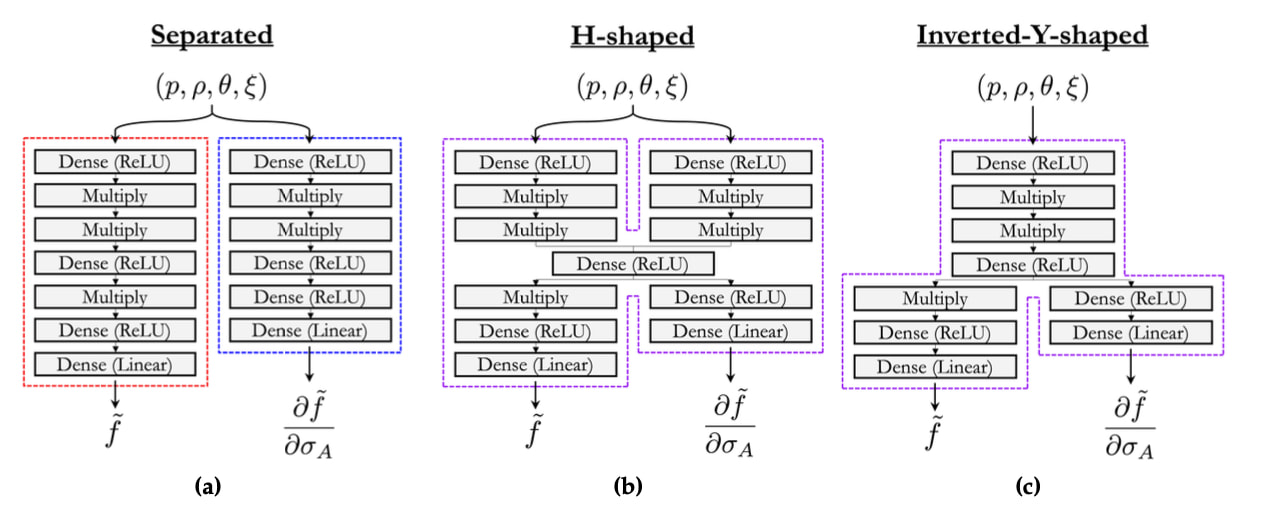

Our collaborative research with Sandia National Laboratories on a PyTorch-UMAT implementation for machine learning models has been accepted by Mechanics of Materials (see preprint [PDF]). This paper introduces a publicly available PyTorch-ABAQUS deep-learning framework for a family of plasticity models in which the yield surface is implicitly represented by a scalar-valued function. Our goal is to introduce a practical framework that can be deployed for engineering analysis through a user-defined material subroutine (UMAT/VUMAT) for ABAQUS, which is written in FORTRAN (see below).

To accomplish this task while leveraging the back-propagation learning algorithm to speed up the neural-network training, we introduce an interface code with which the weights and biases of the trained neural networks obtained via the PyTorch library can be automatically converted into generic FORTRAN code that can become part of the UMAT/VUMAT algorithm. To enable third-party validation, we purposely make all the data sets, the source code used to train the neural-network-based constitutive models, and the trained models available in a public repository. See the link below: https://github.com/hyoungsuksuh/ABAQUS_NN

A variety of NN architectures have been pre-trained (see below). Benchmark material point simulations and finite element simulations in ABAQUS have been provided in the repository. Please feel free to modify the code; we would appreciate it if you cite this paper when you use it for your own research.

Note: we are actively developing this repository, which may contain bugs. If you encounter a bug, please let us (Hyoung Suk Suh, [email protected]; WaiChing Sun, [email protected]) know. We hope that this small tool can encourage and help more researchers from the ABAQUS ecosystem to build their own neural network models. Thank you!
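As a rough illustration of the weight-conversion idea (not the interface code from the repository), the sketch below turns one weight matrix into a Fortran array declaration. The function names, the free-form Fortran output style, and the toy weights are all assumptions; with a real PyTorch model, the nested list could come from something like `model.state_dict()["layer.weight"].tolist()`, where the key name is hypothetical.

```python
def fortran_literal(value):
    """Format a float as a Fortran double-precision literal, e.g. 1.5 -> 1.5000000000000000d+00."""
    return f"{value:.16e}".replace("e", "d")

def weights_to_fortran(name, matrix):
    """Emit a free-form Fortran parameter declaration for a 2D weight matrix.

    `matrix` is a list of rows. Fortran stores arrays column by column, so the
    values are flattened in column-major order before being passed to reshape.
    """
    rows, cols = len(matrix), len(matrix[0])
    flat = [matrix[i][j] for j in range(cols) for i in range(rows)]
    body = ", &\n    ".join(fortran_literal(v) for v in flat)
    return (
        f"real(8), parameter :: {name}({rows},{cols}) = reshape([ &\n"
        f"    {body}], [{rows},{cols}])\n"
    )

# Toy 2x2 weights standing in for a trained layer (illustrative values only).
toy_weights = [[1.0, 2.0],
               [3.0, 4.0]]
source = weights_to_fortran("W1", toy_weights)
print(source)
```

The column-major flattening is the detail most likely to cause silent errors in such a conversion: emitting the values row by row would transpose the matrix on the Fortran side.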

Group News: news about the Computational Poromechanics Lab at Columbia University.
